A Survey of Poisoning Attacks and Countermeasures in Recommender Systems

Awesome RecSys Poisoning

I. Introduction


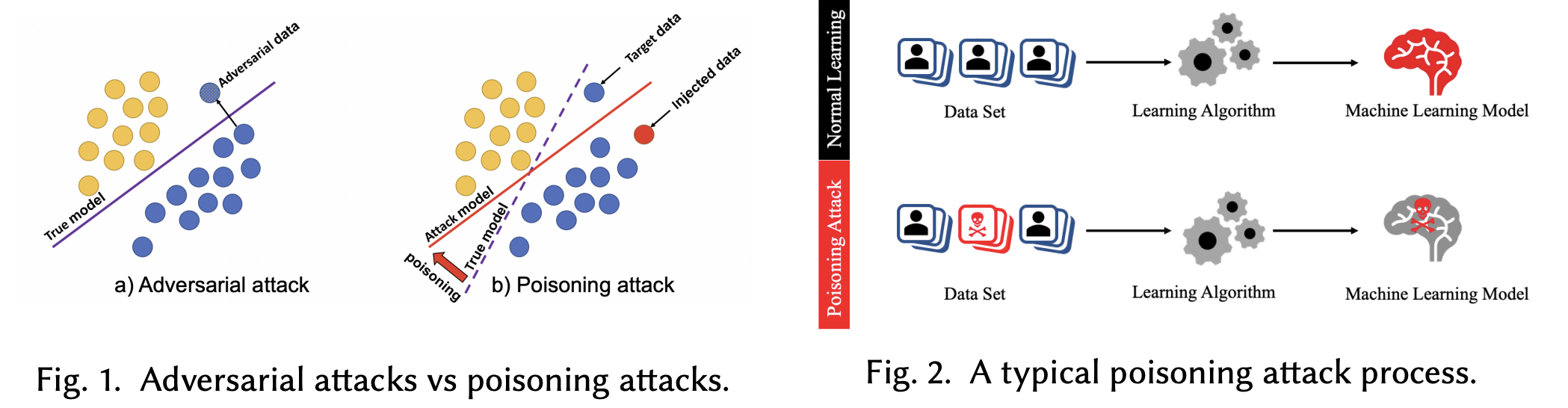

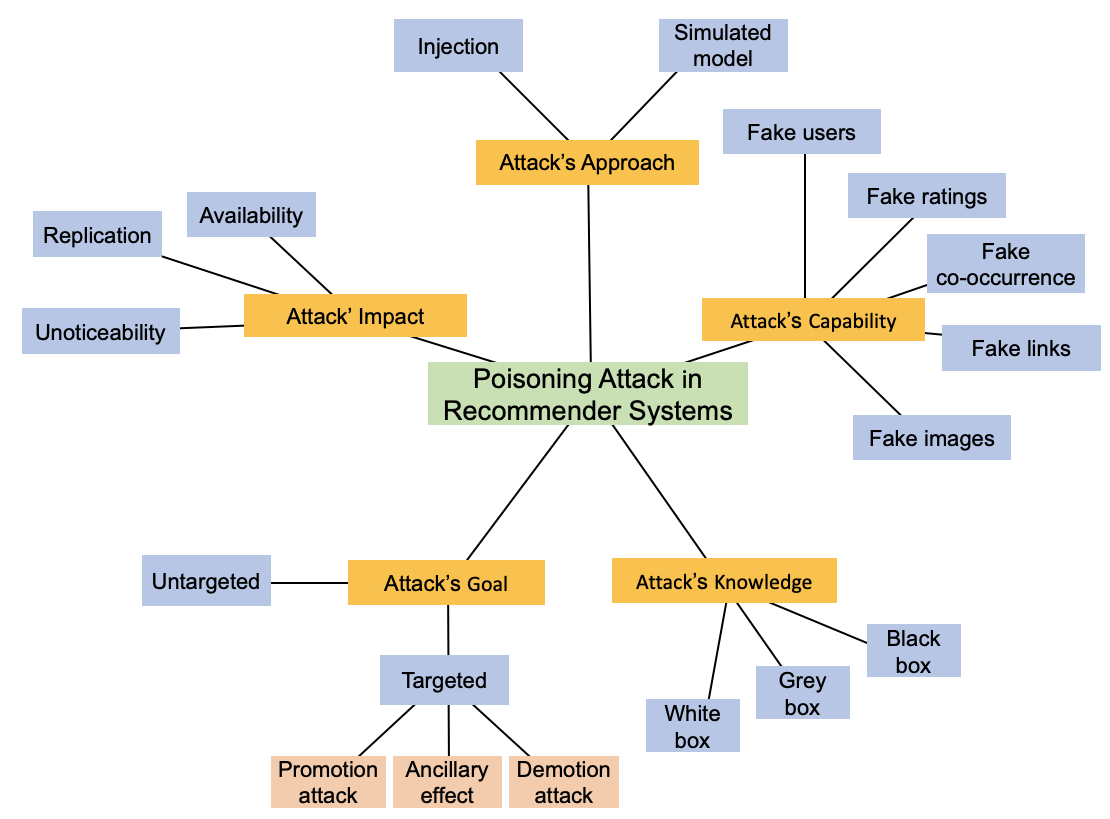

Recommender systems have become an integral part of online services, helping users locate specific information in a sea of data. However, existing studies show that some recommender systems are vulnerable to poisoning attacks, particularly those that involve learning schemes. In a poisoning attack, an adversary injects carefully crafted data into the process of training a model, with the goal of manipulating the system's final recommendations. With recent advances in artificial intelligence, such attacks have gained importance. At present, we have neither a full and clear picture of why adversaries mount such attacks nor comprehensive knowledge of the extent to which such attacks can undermine a model or of the impact they might have. While numerous countermeasures to poisoning attacks have been developed, they have not yet been systematically linked to the properties of the attacks. Consequently, assessing the respective risks and the potential success of mitigation strategies is difficult, if not impossible. This survey aims to fill this gap by focusing primarily on poisoning attacks and their countermeasures, in contrast to prior surveys that mainly focus on attacks and their detection methods. Through an exhaustive literature review, we provide a novel taxonomy for poisoning attacks, formalise its dimensions, and accordingly organise 30+ attacks described in the literature. Further, we review 40+ countermeasures to detect and/or prevent poisoning attacks, evaluating their effectiveness against specific types of attacks. This comprehensive survey should serve as a point of reference for protecting recommender systems against poisoning attacks. The article concludes with a discussion of open issues in the field and impactful directions for future research.
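To make the definition of a poisoning attack concrete, the Python sketch below poisons a toy recommender and then applies a simple detector. It is an illustration under stated assumptions, not code from this survey or from any listed paper: the rating data, the mean-rating recommender, the helper names (`item_means`, `recommend`, `inject_average_attack`, `cosine`, `flag_suspicious`), the choice of six fake profiles and the 0.95 threshold are all hypothetical. The attack is in the spirit of a classic "average attack" (each fake profile gives the target item the maximum rating and rates every other item at its current mean), and the detector uses a DegSim-style feature, i.e. a profile's average cosine similarity to its k most similar peers.

```python
from math import sqrt
from statistics import mean

# Hypothetical toy data: user -> {item: rating on a 1-5 scale}.
RATINGS = {
    "alice": {"i1": 5, "i2": 3},
    "bob":   {"i1": 4, "i3": 4, "i4": 1},
    "carol": {"i2": 4, "i3": 5, "i4": 2},
    "dave":  {"i1": 3, "i2": 4, "i3": 3},
}

def item_means(data):
    """Mean rating of every item over all profiles in `data`."""
    per_item = {}
    for profile in data.values():
        for item, r in profile.items():
            per_item.setdefault(item, []).append(r)
    return {item: mean(rs) for item, rs in per_item.items()}

def recommend(data, user, n=1):
    """Toy top-n recommender: the user's unrated items with the highest mean rating
    (ties broken alphabetically by item id)."""
    means = item_means(data)
    candidates = [i for i in means if i not in data[user]]
    return sorted(candidates, key=lambda i: (-means[i], i))[:n]

def inject_average_attack(data, target, num_fakes):
    """Hypothetical push attack: each fake user rates the target 5 and every
    other item at its current mean, so the other items' means stay unchanged."""
    means = item_means(data)
    poisoned = dict(data)  # honest profiles are shared, never mutated
    for j in range(num_fakes):
        fake = {item: m for item, m in means.items() if item != target}
        fake[target] = 5
        poisoned[f"fake{j}"] = fake
    return poisoned

def cosine(p, q):
    """Cosine similarity of two sparse profiles (unrated items count as 0)."""
    dot = sum(r * q[i] for i, r in p.items() if i in q)
    norm_p = sqrt(sum(r * r for r in p.values()))
    norm_q = sqrt(sum(r * r for r in q.values()))
    return dot / (norm_p * norm_q)

def flag_suspicious(data, k=3, threshold=0.95):
    """Flag users whose average similarity to their k most similar other
    users is at least `threshold` (a DegSim-style detection feature)."""
    flagged = set()
    for u, p in data.items():
        sims = sorted((cosine(p, q) for v, q in data.items() if v != u), reverse=True)
        if mean(sims[:k]) >= threshold:
            flagged.add(u)
    return flagged

if __name__ == "__main__":
    print(recommend(RATINGS, "alice"))          # ['i3']
    poisoned = inject_average_attack(RATINGS, "i4", num_fakes=6)
    print(recommend(poisoned, "alice"))         # ['i4'] (target pushed to the top)
    suspects = flag_suspicious(poisoned)
    print(sorted(suspects))                     # ['fake0', ..., 'fake5']
    cleaned = {u: p for u, p in poisoned.items() if u not in suspects}
    print(recommend(cleaned, "alice"))          # ['i3'] again
```

In this toy setting, five fake profiles only tie the target `i4` with `i3` (mean 4.0 each, and the tie goes to `i3`), while six raise `i4` to a mean of 4.125 and push it to the top for `alice`. The detector succeeds only because the injected profiles are identical to one another; generative attacks such as those listed below aim to produce more realistic profiles, which is one reason a single heuristic like this should not be expected to suffice.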


II. List of Poisoning Attacks

III. List of Countermeasures

| Paper | Venue | Year | Type | Data | Code |
|---|---|---|---|---|---|
| Poisoning GNN-based recommender systems with generative surrogate-based attacks | ACM TOIS | 2023 | Model-intrinsic | FR, ML, AMCP, LF | - |
| Shilling Black-Box Recommender Systems by Learning to Generate Fake User Profiles | IEEE Trans. Neural Netw. Learn. Syst. | 2022 | Model-agnostic | ML, FT, YE, AAT | - |
| Knowledge enhanced Black-box Attacks for Recommendations | KDD | 2022 | Model-agnostic | ML, BC, LA | - |
| LOKI: A Practical Data Poisoning Attack Framework against Next Item Recommendations | TKDE | 2022 | Model-agnostic | ABT, St, GOW | - |
| PipAttack: Poisoning federated recommender systems for manipulating item promotion | WSDM | 2022 | Model-intrinsic | ML, AMCP | - |
| Poisoning Deep Learning based Recommender Model in Federated Learning Scenarios | IJCAI | 2022 | Model-intrinsic | ML, ADM | Python |
| FedAttack: Effective and Covert Poisoning Attack on Federated Recommendation via Hard Sampling | arXiv | 2022 | Model-intrinsic | ML, ABT | Python |
| UA-FedRec: Untargeted Attack on Federated News Recommendation | arXiv | 2022 | Model-intrinsic | MIND, Feeds | Python |
| Triple Adversarial Learning for Influence based Poisoning Attack in Recommender Systems | KDD | 2021 | Model-agnostic | ML, FT | Python |
| Reverse Attack: Black-box Attacks on Collaborative Recommendation | CCS | 2021 | Model-agnostic | ML, NF, AMB & ADM, TW, G+, CIT | - |
| Attacking Black-box Recommendations via Copying Cross-domain User Profiles | ICDE | 2021 | Model-agnostic | ML, NF | - |
| Simulating real profiles for shilling attacks: A generative approach | KBS | 2021 | Model-agnostic | ML | - |
| Ready for emerging threats to recommender systems? A graph convolution-based generative shilling attack | IS | 2021 | Model-agnostic | DB, CI | Python |
| Data poisoning attacks on neighborhood-based recommender systems | ETT | 2021 | Model-intrinsic | FT, ML, AMV | - |
| Data Poisoning Attack against Recommender System Using Incomplete and Perturbed Data | KDD | 2021 | Model-intrinsic | ML, AIV | - |
| Black-Box Attacks on Sequential Recommenders via Data-Free Model Extraction | RecSys | 2021 | Model-intrinsic | ML, St, ABT | Python |
| Poisoning attacks against knowledge graph-based recommendation systems using deep reinforcement learning | Neural Comput. Appl. | 2021 | Model-intrinsic | ML, FTr | - |
| Adversarial Item Promotion: Vulnerabilities at the Core of Top-N Recommenders that Use Images to Address Cold Start | WWW | 2021 | Model-intrinsic | AMMN, TC | Python |
| Data poisoning attacks to deep learning based recommender systems | arXiv | 2021 | Model-intrinsic | ML, LA | - |
| Practical data poisoning attack against next-item recommendation | WWW | 2020 | Model-intrinsic | ABT | - |
| Influence function based data poisoning attacks to top-N recommender systems | WWW | 2020 | Model-intrinsic | YE, ADM | - |
| Attacking recommender systems with augmented user profiles | CIKM | 2020 | Model-agnostic | ML, FT, AAT | - |
| PoisonRec: an adaptive data poisoning framework for attacking black-box recommender systems | ICDE | 2020 | Model-agnostic | St, AMCP, ML | - |
| Revisiting adversarially learned injection attacks against recommender systems | RecSys | 2020 | Model-agnostic | GOW | Python |
| Adversarial attacks on an oblivious recommender | RecSys | 2019 | Model-intrinsic | ML | - |
| Targeted poisoning attacks on social recommender systems | GLOBECOM | 2019 | Model-intrinsic | FT | - |
| Data poisoning attacks on cross-domain recommendation | CIKM | 2019 | Model-intrinsic | NF, ML | - |
| Poisoning attacks to graph-based recommender systems | ACSAC | 2018 | Model-intrinsic | ML, AIV | - |
| Fake Co-visitation Injection Attacks to Recommender Systems | NDSS | 2017 | Model-intrinsic | YT, eB, AMV, YE, LI | - |
| Data poisoning attacks on factorization-based collaborative filtering | NIPS | 2016 | Model-intrinsic | ML | Python |
| Shilling recommender systems for fun and profit | WWW | 2004 | Model-agnostic | ML | - |

IV. Datasets

| Abbreviation | Dataset |
|---|---|
| AAT | Amazon Automotive |
| ABT | Amazon Beauty |
| ADM | Amazon Digital Music |
| AIV | Amazon Instant Video |
| AMB | Amazon Book |
| AMMN | Amazon Men |
| AMV | Amazon Movie |
| AMCP | Amazon Cell-phone |
| AMP | Amazon Product |
| BC | Book-Crossing |
| CI | Ciao |
| CIT | Citation Network |
| DB | Douban |
| eB | eBay |
| EM | EachMovie |
| EP | Epinions |
| Feeds | Microsoft News App |
| FR | Frappe (mobile app recommendations) |
| FT | FilmTrust |
| FTr | Fund Transactions |
| G+ | Google+ |
| GOW | Gowalla |
| MIND | Microsoft News |
| LA | Last.fm |
| LI | LinkedIn |
| LT | LibraryThing |
| ML | MovieLens |
| NF | Netflix |
| St | Steam |
| TC | Tradesy |
| Trip | TripAdvisor |
| TW | Twitter |
| YE | Yelp |
| YT | YouTube |
| SYNC | Synthetic datasets |

V. Citations


Source: https://github.com/tamlhp/awesome-recsys-poisoning


Paper: https://arxiv.org/abs/2404.14942


© 2024 Awesome RecSys Poisoning